https://paperswithcode.com/paper/streamingt2v-consistent-dynamic-and

Papers with Code - StreamingT2V: Consistent, Dynamic, and Extendable Long Video Generation from Text

Implemented in one code library.


Long Video Generation from Text

Text-to-video diffusion models can generate high-quality videos that follow text instructions, making it easy to create diverse and individual content.

However, existing approaches mostly focus on generating high-quality short videos (typically 16 or 24 frames) and produce hard cuts when naively extended to long video synthesis.

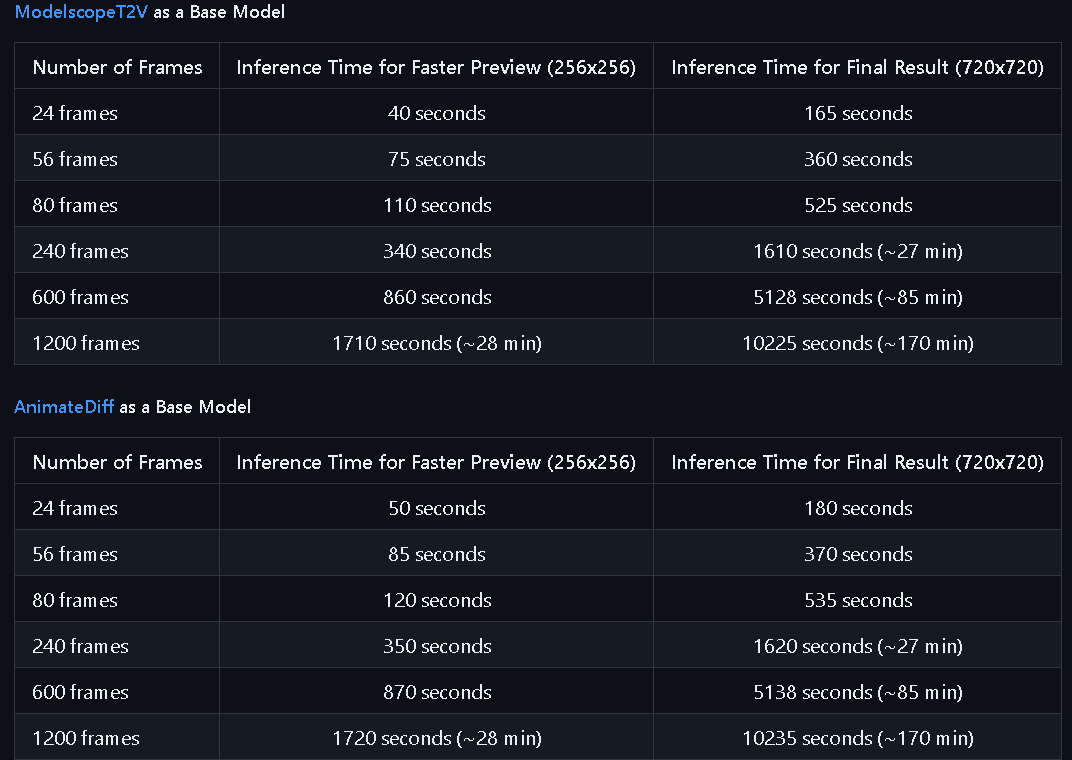

To overcome these limitations, we introduce StreamingT2V, an autoregressive approach that generates long videos of 80, 240, 600, 1200, or more frames with smooth transitions.
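To make the autoregressive idea concrete, here is a minimal sketch of chunk-by-chunk extension in Python. It is not the authors' code: the chunk length of 16, the 8 conditioning frames, and the rule that only the frames after the overlap are kept are all assumptions for illustration, and `generate_chunk` / `dummy_chunk` are hypothetical stand-ins for a real video diffusion model.

```python
from typing import Callable, List

# Hypothetical stand-in: a "frame" is just an int here; a real model works on image tensors.
Frame = int
ChunkFn = Callable[[List[Frame], int], List[Frame]]


def stream_generate(total_frames: int, generate_chunk: ChunkFn,
                    chunk_len: int = 16, overlap: int = 8) -> List[Frame]:
    """Autoregressively extend a video chunk by chunk.

    Assumptions (not taken from the paper): every call returns `chunk_len`
    frames, and the first `overlap` of them re-cover the conditioning frames,
    so only the remaining `chunk_len - overlap` frames are new.
    """
    if not 0 <= overlap < chunk_len:
        raise ValueError("overlap must be in [0, chunk_len)")
    video = list(generate_chunk([], chunk_len))  # first chunk: conditioned on text only
    while len(video) < total_frames:
        # Short-term memory: the tail of what has been generated so far.
        context = video[-overlap:] if overlap else []
        chunk = generate_chunk(context, chunk_len)
        video.extend(chunk[overlap:])  # keep only the newly generated frames
    return video[:total_frames]


def dummy_chunk(context: List[Frame], n: int) -> List[Frame]:
    """Hypothetical toy generator that just continues the frame-index sequence."""
    start = context[0] if context else 0
    return list(range(start, start + n))


if __name__ == "__main__":
    frames = stream_generate(80, dummy_chunk)
    print(len(frames), frames[:3], frames[-3:])
```

With these assumed settings, each call after the first adds 8 new frames, so longer videos simply mean more loop iterations; the memory cost per step stays constant because only the last `overlap` frames are passed forward.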

Method overview: StreamingT2V extends a video diffusion model (VDM) with a conditional attention module (CAM) as short-term memory and an appearance preservation module (APM) as long-term memory. CAM conditions the VDM on the previous chunk through a frame encoder.
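The conditioning step can be pictured as cross-attention from the current chunk's features to encoded frames of the previous chunk. The sketch below is generic scaled dot-product cross-attention in plain Python, not CAM's actual architecture: `encode_frames` is a hypothetical identity encoder, and the residual `gate` (assumed to start at 0, so the unconditioned VDM is unchanged at first) is an illustrative assumption.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: List[Vector], keys: List[Vector],
                    values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention: each query attends over all keys."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[i] for w, v in zip(weights, values))
                    for i in range(len(values[0]))])
    return out


def encode_frames(frames: List[Vector]) -> List[Vector]:
    """Hypothetical frame encoder: identity here; a real one is a learned network."""
    return [list(f) for f in frames]


def cam_condition(chunk_feats: List[Vector], prev_frames: List[Vector],
                  gate: float = 0.0) -> List[Vector]:
    """Hypothetical CAM-style residual: current-chunk features attend to the
    encoded previous-chunk frames, and the result is added back scaled by `gate`."""
    ctx = encode_frames(prev_frames)
    attended = cross_attention(chunk_feats, ctx, ctx)
    return [[x + gate * a for x, a in zip(xs, attn)]
            for xs, attn in zip(chunk_feats, attended)]


if __name__ == "__main__":
    current = [[1.0, 0.0], [0.0, 1.0]]   # toy features of the chunk being generated
    previous = [[0.5, 0.5], [1.0, 0.0]]  # toy frames from the previous chunk
    print(cam_condition(current, previous, gate=1.0))
```

Because attention lets every position in the new chunk look at every conditioning frame, rather than copying a fixed frame, it can keep continuity at the chunk boundary without forcing the new chunk to stay static.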

CAM's attention mechanism yields smooth transitions between chunks while still allowing a large amount of motion in the video. APM extracts high-level image features from an anchor frame and injects them into the text cross-attention layers of the VDM, which helps preserve object and scene features throughout autoregressive video generation.
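One simple way to picture "injecting anchor-frame features into the text cross-attention" is to append image tokens to the text tokens, so each query can attend to both. The sketch below assumes plain concatenation; how APM actually projects and mixes the anchor features is not described in this post, and `apm_context` is a hypothetical name.

```python
import math
from typing import List

Vector = List[float]


def attention_weights(query: Vector, keys: List[Vector]) -> List[float]:
    """Softmax-normalized scaled dot-product scores of one query over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def apm_context(text_tokens: List[Vector], anchor_tokens: List[Vector]) -> List[Vector]:
    """Hypothetical APM-style injection: append high-level features of the anchor
    frame to the text tokens, so every text cross-attention layer can also
    attend to the anchor frame (concatenation is an assumption)."""
    return text_tokens + anchor_tokens


if __name__ == "__main__":
    text = [[1.0, 0.0]]    # toy text-token embedding
    anchor = [[0.0, 1.0]]  # toy anchor-frame feature
    ctx = apm_context(text, anchor)
    w = attention_weights([0.0, 1.0], ctx)
    print(f"text weight={w[0]:.3f}, anchor weight={w[1]:.3f}")
```

Since the anchor frame stays fixed for the whole video, every chunk sees the same appearance reference, which is what makes it act as long-term memory, in contrast to CAM's sliding window over the previous chunk.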

1200 FRAMES @ 2 MINUTES

"Wide shot of battlefield, stormtroopers running..."

600 FRAMES @ 1 MINUTE

"Close flyover over a large wheat field..."

240 FRAMES @ 24 SECONDS

https://streamingt2v.github.io/static/videos/240/0009_0000_Santa_Claus_is_dancing.mp4

80 FRAMES @ 8 SECONDS

"A squirrel on a table full of big nuts."
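Each caption above pairs a frame count with a duration; dividing the two shows that all four showcase clips play at the same rate:

```latex
\frac{1200\ \text{frames}}{120\ \text{s}}
= \frac{600\ \text{frames}}{60\ \text{s}}
= \frac{240\ \text{frames}}{24\ \text{s}}
= \frac{80\ \text{frames}}{8\ \text{s}}
= 10\ \text{fps}
```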

Spaces - huggingface

https://huggingface.co/spaces/PAIR/StreamingT2V

StreamingT2V - a Hugging Face Space by PAIR

